Caching can substantially reduce load times and bandwidth usage, thereby enhancing the overall user experience. It allows the application to store the results of expensive database queries or API calls, enabling instant serving of cached data instead of re-computing or fetching it from the source each time. In this tutorial, we will explore why and how to cache POST requests in Nginx.

There are only two hard things in Computer Science: cache invalidation and naming things.

— Phil Karlton

Caching POST requests: potential hazards

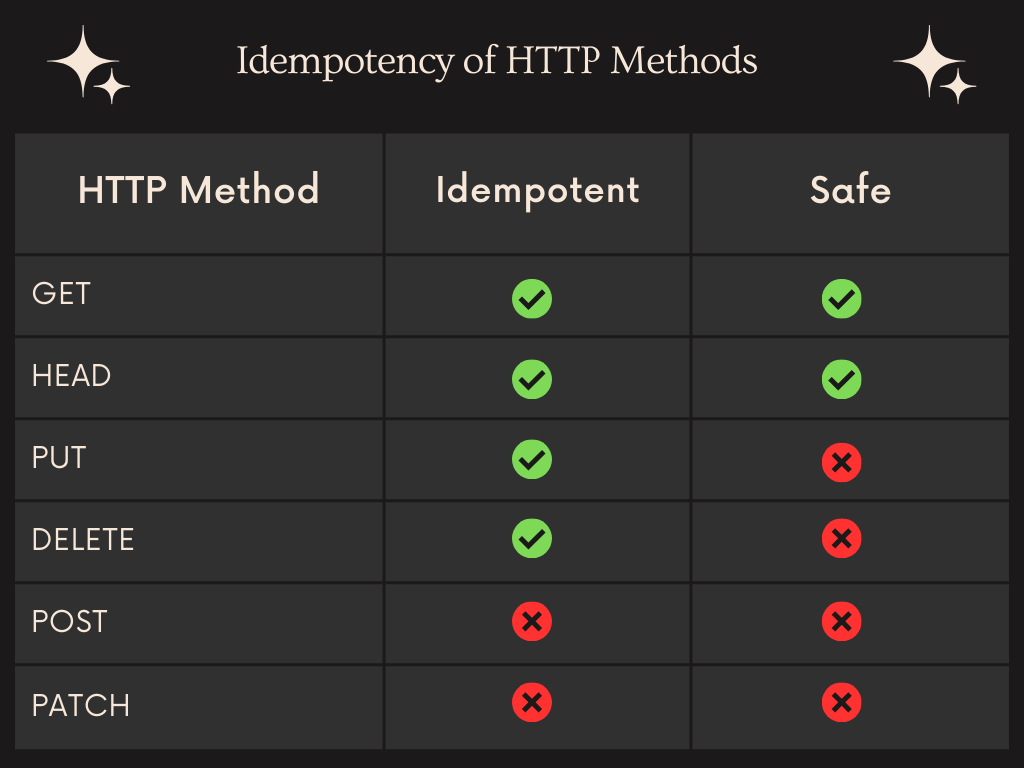

By default, responses to POST requests are not cached. POST requests are usually neither safe nor idempotent, so caching them can have unintended consequences. They may carry sensitive data, such as passwords, which risks exposure to other users and potential threats when cached. POST requests also often carry large payloads, such as file uploads, which can consume significant memory or storage when stored. These hazards are why caching POST requests is not generally advised.

Although caching POST requests is usually not a good idea, RFC 2616 (since superseded by RFC 9110 and RFC 9111, which keep a similar allowance) permits the response to a POST request to be cached, provided the response includes appropriate Cache-Control or Expires header fields.
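To make that rule concrete, here is a minimal Python sketch of the kind of check a shared cache might apply to a POST response. The function name `has_explicit_freshness` is hypothetical, and the logic is deliberately simplified: it only looks for explicit freshness information and for the most common directives that forbid shared caching.

```python
from typing import Mapping


def has_explicit_freshness(headers: Mapping[str, str]) -> bool:
    """Simplified check: does a response carry explicit freshness information?"""
    # Header names are case-insensitive, so normalise them first.
    normalized = {name.lower(): value for name, value in headers.items()}
    directives = [
        part.strip().lower()
        for part in normalized.get("cache-control", "").split(",")
        if part.strip()
    ]
    # Directives that forbid storing or reusing the response in a shared cache.
    if any(d in ("no-store", "no-cache", "private") for d in directives):
        return False
    # Explicit lifetime via Cache-Control.
    if any(d.startswith(("max-age=", "s-maxage=")) for d in directives):
        return True
    # Otherwise fall back to the presence of an Expires header.
    return "expires" in normalized


print(has_explicit_freshness({"Cache-Control": "max-age=600"}))         # True
print(has_explicit_freshness({"Cache-Control": "no-store, max-age=5"}))  # False
print(has_explicit_freshness({"Content-Type": "application/json"}))      # False
```

A real cache considers many more conditions than this; the sketch only illustrates the "appropriate Cache-Control or Expires" requirement.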

The question: why would you want to cache a POST request?

The decision to cache a POST request typically depends on its impact on the server. If the request triggers side effects on the server, such as creating or modifying a resource, its response should not be cached. However, a POST request can also be safe and idempotent in practice, for example when it only uses the request body to carry query parameters. In such instances, caching is considered safe.

Why and how to cache POST requests

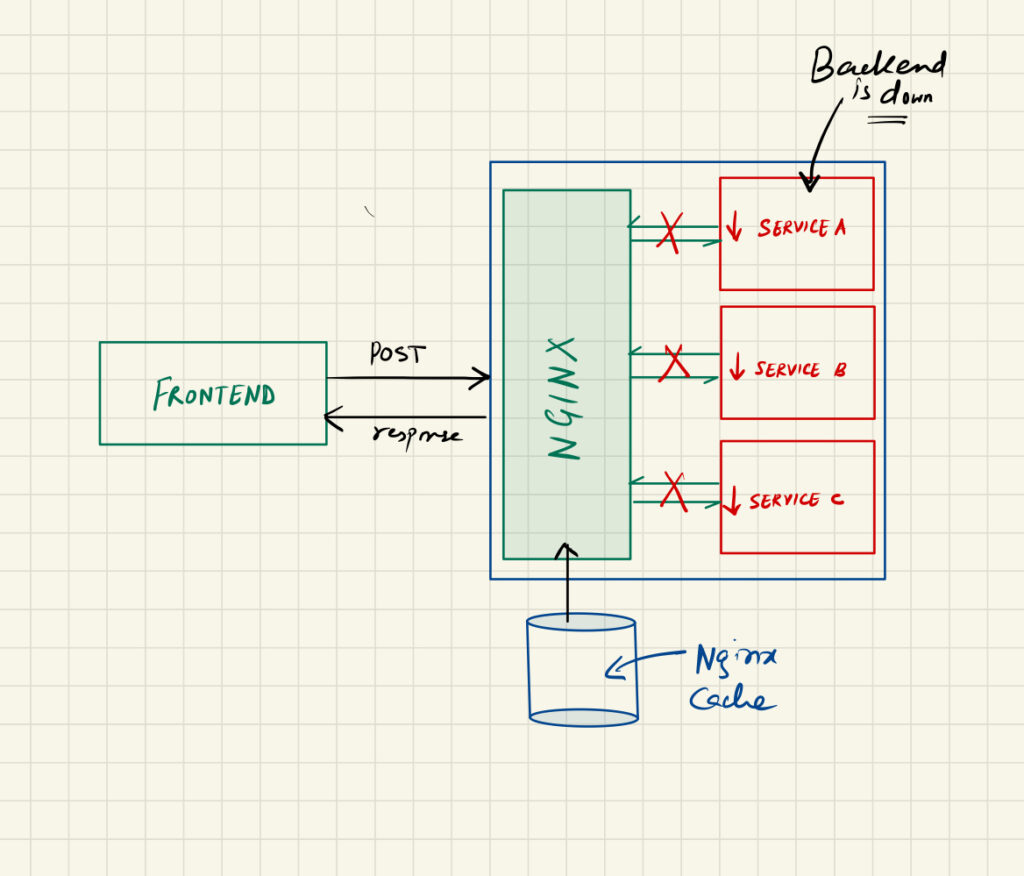

Recently, while working on a project, I found myself designing a simple fallback mechanism to ensure responses to requests even when the backend was offline. The request itself had no side effects, though the returned data might change infrequently. Thus, using caching made sense.

I did not want to use Redis for two reasons:

- I wanted to keep the approach simple, without involving ‘too many’ moving parts.

- Redis does not automatically serve stale cache data when the cache expires or is evicted (invalidate-on-expire).

As we were using Nginx, I decided to go ahead with this approach (see figure).

The frontend makes a POST request to the server, where Nginx is set up as a reverse proxy. While the services are up and running, Nginx caches their responses for a certain time. If the services go down, Nginx serves the cached response (even if it is stale) from its store.
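Before looking at the actual configuration, the behaviour described above can be modelled in a few lines of Python. This is only a toy sketch: the class `StaleOnErrorCache` and its methods are hypothetical, the status strings borrow the names Nginx reports in `$upstream_cache_status`, and eviction (`inactive`, `max_size`) is ignored entirely.

```python
import time
from typing import Callable, Dict, Tuple


class StaleOnErrorCache:
    """Toy model: cache responses for a TTL, fall back to stale data on errors."""

    def __init__(self, ttl_seconds: float, clock: Callable[[], float] = time.monotonic):
        self.ttl = ttl_seconds
        self.clock = clock
        self.store: Dict[str, Tuple[float, str]] = {}  # key -> (stored_at, response)

    def fetch(self, key: str, backend: Callable[[], str]) -> Tuple[str, str]:
        """Return (status, response) for the given cache key."""
        entry = self.store.get(key)
        now = self.clock()
        if entry is not None and now - entry[0] < self.ttl:
            return "HIT", entry[1]  # still fresh, backend is not contacted
        try:
            response = backend()
        except ConnectionError:
            if entry is not None:
                return "STALE", entry[1]  # backend down, serve the expired copy
            raise  # nothing cached yet, so the error reaches the client
        self.store[key] = (now, response)
        return ("EXPIRED" if entry is not None else "MISS"), response


def healthy() -> str:
    return "fresh data"


def down() -> str:
    raise ConnectionError("backend offline")


now = [0.0]  # fake clock so the example does not have to wait
cache = StaleOnErrorCache(ttl_seconds=3 * 3600, clock=lambda: now[0])
key = '/cache/me|{"user": 1}'

print(cache.fetch(key, healthy))  # ('MISS', 'fresh data')
print(cache.fetch(key, down))     # ('HIT', 'fresh data')
now[0] += 4 * 3600                # four hours later the entry has expired
print(cache.fetch(key, down))     # ('STALE', 'fresh data')
```

The third call is the fallback case: the entry is past its lifetime, the backend fails, and the old response is served anyway.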

    http {
        ...
        # Define cache zone
        proxy_cache_path /var/cache/nginx levels=1:2 keys_zone=my-cache:20m max_size=1g inactive=3h use_temp_path=off;
        ...
    }

    location /cache/me {
        proxy_set_header X-Real-IP $remote_addr;
        proxy_set_header Host $host;
        proxy_set_header X-Forwarded-For $remote_addr;
        proxy_pass http://service:3000;

        # Use cache zone defined in http
        proxy_cache my-cache;
        # Let only one request at a time populate a missing cache entry
        proxy_cache_lock on;

        # Cache for 3h if the status code is 200/201/302
        proxy_cache_valid 200 201 302 3h;

        # Serve stale cached responses when the backend errors or times out
        proxy_cache_use_stale error timeout updating http_500 http_502 http_503 http_504;

        # Cache POST in addition to GET and HEAD (which are always included)
        proxy_cache_methods POST;

        # Important: key on URI + body so different payloads get separate entries
        proxy_cache_key "$request_uri|$request_body";

        # Cache even if the upstream response headers say otherwise
        proxy_ignore_headers "Cache-Control" "Expires" "Set-Cookie";

        # Expose the cache status (e.g. HIT, MISS, STALE) to the client
        add_header X-Cached $upstream_cache_status;
    }

Things to consider

In proxy_cache_key "$request_uri|$request_body", we use both the request URI and the request body to identify the cached response. This was important in my case because the request payload and the response contained sensitive information, so we needed to ensure that responses are cached on a per-user basis. This, however, has a few implications:

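- Including the request body in the key may degrade performance if the body is large.

- Memory and storage usage increase, since each distinct body gets its own cache entry.

- Even a slightly different request body (for example, different whitespace or field order) makes Nginx cache a new response. This can lead to redundant entries and inconsistent data.

- Nginx only populates $request_body when the body was read into a memory buffer. A body larger than client_body_buffer_size is written to a temporary file and the variable is empty, so such requests to the same URI would share one cache key.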
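The redundancy caused by near-identical bodies can be reduced by normalising the body before it reaches Nginx. The configuration above does not do this; the following is a minimal Python sketch of a hypothetical pre-processing step on the client side, assuming the bodies are JSON. The helper names `canonical_body` and `cache_key` are illustrative only.

```python
import hashlib
import json


def canonical_body(raw_body: bytes) -> bytes:
    """Re-serialise a JSON body with sorted keys and no insignificant whitespace."""
    parsed = json.loads(raw_body)
    return json.dumps(parsed, sort_keys=True, separators=(",", ":")).encode("utf-8")


def cache_key(request_uri: str, raw_body: bytes) -> str:
    """Mimic "$request_uri|$request_body" over the canonical body, then hash it."""
    material = request_uri.encode("utf-8") + b"|" + canonical_body(raw_body)
    return hashlib.sha256(material).hexdigest()


a = cache_key("/cache/me", b'{"user": 1, "page": 2}')
b = cache_key("/cache/me", b'{ "page":2, "user":1 }')  # same data, different layout
c = cache_key("/cache/me", b'{"user": 2, "page": 2}')  # different user

print(a == b)  # True
print(a == c)  # False
```

If the client always sends the canonical form, requests that mean the same thing map to the same cache entry, while requests for different users still get separate entries.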

Conclusion

Caching POST requests in Nginx may offer a viable solution for enhancing application performance. Despite the inherent risks associated with caching such requests, careful implementation can make this approach both safe and effective. This tutorial discusses how we can implement POST request caching wisely.

