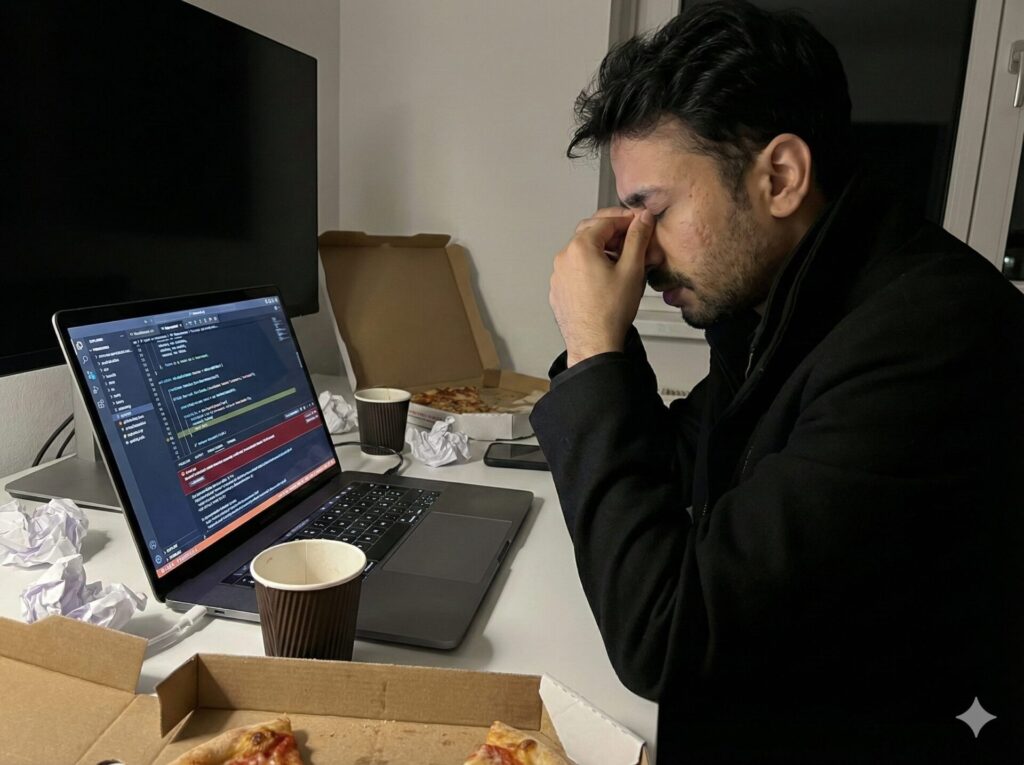

For three days, our API gateway service was hitting 504 Gateway Timeouts on requests that should have been instantaneous. The issue was blocking QA and frontend work, and the symptoms were maddeningly vague.

This is a breakdown of how we debugged it, the two false leads that cost us days, and the two lines of code that actually fixed it.

The Symptoms

- Requests to microservice-iam (user data) were hitting the 5-second proxy limit and dying.
- Requests to microservice-app (application logic) were very slow, but often succeeding.

The False Leads

1. “It’s a Resource Issue”

When a microservices environment starts crawling, the immediate suspicion is resource exhaustion. Checking htop on the develop server showed high memory usage and a lot of context switching.

I first checked if we were accidentally throttling ourselves. I reviewed the Docker configurations to see if we had set aggressive cpus or memory limits on the containers. We hadn’t.

With everything seemingly pointing to hardware limitations, we decided to scale up. I migrated the environment to a significantly larger instance with more vCPUs and double the RAM. I expected the latency to drop immediately.

The result: zero change. The timeouts persisted. This confirmed it wasn’t a capacity problem.

2. The “Slow but Successful” Red Herring

This was the most damaging distraction. Because microservice-app requests were completing (albeit slowly) while microservice-iam requests were timing out, I assumed the infrastructure (Redis/Postgres) was fine. If Redis or Postgres were broken, everything would fail, right?

This led me to scrub the microservice-iam codebase for hours, looking for inefficient loops or bad logic specific to that service. I was looking for a bug in the service code, not the shared infrastructure.

The Investigation

We needed to isolate the bottleneck.

1. Checking the Network

I bypassed the application layer and ran a raw Node.js TCP script to handshake directly with the IAM service’s health port.

- Result: < 5 ms response. The network was fine.
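The original script isn't shown. A minimal sketch of that kind of check, using only Node's built-in `net` module, times how long `net.connect` takes to emit `'connect'` (that is, to complete the TCP handshake) and then closes the socket. The function name `measureTcpConnect`, its default timeout, and whatever host and port you point it at are assumptions for illustration.

```javascript
const net = require("net");

// Measures how long a raw TCP connect (the handshake) takes, bypassing
// HTTP and all application code. Hypothetical helper, not the original script.
function measureTcpConnect(host, port, timeoutMs = 2000) {
  return new Promise((resolve, reject) => {
    const start = process.hrtime.bigint();
    const socket = net.connect({ host, port });
    socket.setTimeout(timeoutMs); // also applies while the connection is pending

    socket.once("connect", () => {
      const elapsedMs = Number(process.hrtime.bigint() - start) / 1e6;
      socket.destroy(); // only the handshake matters; close immediately
      resolve(elapsedMs);
    });
    socket.once("timeout", () => {
      socket.destroy();
      reject(new Error(`connect to ${host}:${port} timed out after ${timeoutMs} ms`));
    });
    socket.once("error", reject); // e.g. ECONNREFUSED, DNS failure
  });
}
```

Calling `measureTcpConnect(host, port)` against a service's health port returns the handshake time in milliseconds. Because the socket is destroyed as soon as it connects, no request is ever sent, so a fast result isolates the network from the application.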

2. Checking the Database

I grabbed the exact query the application was running and ran EXPLAIN ANALYZE on Postgres.

- Result: 0.341 ms execution time. The database was fast.
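Running EXPLAIN ANALYZE by hand in psql is enough for a one-off check. To script it from Node.js, a small helper can wrap any client. This sketch assumes a `runQuery(sql)` function that resolves to `{ rows }`, such as `(sql) => pool.query(sql)` with node-postgres; the helper name `explainAnalyze` is hypothetical. It relies on two Postgres behaviors: EXPLAIN returns one row per plan line in a column named "QUERY PLAN", and EXPLAIN ANALYZE reports an "Execution Time: X ms" line.

```javascript
// Runs EXPLAIN ANALYZE for a query and extracts Postgres's reported
// execution time. `runQuery` is an injected function (assumption) that
// sends SQL and resolves to { rows: [...] }.
// Caution: EXPLAIN ANALYZE actually executes the statement; wrap writes in
// a transaction you roll back.
async function explainAnalyze(runQuery, sql) {
  const result = await runQuery(`EXPLAIN ANALYZE ${sql}`);

  // One row per plan line, in a column named "QUERY PLAN".
  const planLines = result.rows.map((row) => row["QUERY PLAN"]);

  // Case-insensitive to also match older "Execution time:" output.
  const match = planLines
    .map((line) => line.trim().match(/^Execution Time: ([\d.]+) ms$/i))
    .find(Boolean);

  return { planLines, executionMs: match ? Number(match[1]) : null };
}
```

Injecting `runQuery` keeps the helper independent of any particular driver and makes it trivial to test with a fake result.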

The Bottleneck: Exponential Latency

If the network and DB were instant, the time had to be disappearing in the application logic.

The confusing part was microservice-app. It uses the exact same caching library and logic as microservice-iam (versioned keys, scoped lookups), so it should have been failing too.

The difference turned out to be the service’s role. microservice-app only handles application-specific logic. microservice-iam handles user data and authentication for the entire platform. Every request that hits the gateway, regardless of destination, triggers an auth check that uses microservice-iam.

It was getting hammered with far higher request volume than microservice-app. While microservice-app was suffering from the same latency bug, it stayed just enough “under the radar” to scrape by with slow successes. microservice-iam, sitting in the critical path of everything, was being pushed over the edge.

I wrote a script to simulate the Redis call sequence directly from the container:

- Call 1: 200ms

- Call 2: 400ms

- Call 3: 800ms

- Call 4: 1.6s

- Call 5: 3.2s

By the time the metadata checks finished, over 18 seconds had passed.
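The simulation script itself isn't reproduced here. A minimal sketch of such a harness awaits each call before starting the next, the way a request handler chains cache lookups, and records per-call latency with `process.hrtime.bigint()`. `timeSequentialCalls` is a hypothetical name, and the demo uses a stand-in call whose delay doubles (10, 20, 40 ms) instead of a real Redis connection; to measure a real client you would pass something like `(i) => keyv.get(\`key-${i}\`)`.

```javascript
// Times each call in a strictly sequential chain and returns the
// per-call durations in milliseconds.
async function timeSequentialCalls(call, count) {
  const durationsMs = [];
  for (let i = 0; i < count; i++) {
    const start = process.hrtime.bigint();
    await call(i); // finish this call before starting the next
    const elapsedNs = process.hrtime.bigint() - start;
    durationsMs.push(Number(elapsedNs) / 1e6);
  }
  return durationsMs;
}

// Stand-in for a Redis round trip: resolves after `ms` milliseconds.
const sleep = (ms) => new Promise((resolve) => setTimeout(resolve, ms));

// Demo: latency doubles per call (10, 20, 40 ms), imitating the pattern above.
timeSequentialCalls((i) => sleep(10 * 2 ** i), 3).then((durations) => {
  durations.forEach((d, i) => console.log(`Call ${i + 1}: ${d.toFixed(1)} ms`));
  const total = durations.reduce((a, b) => a + b, 0);
  console.log(`Total: ${total.toFixed(1)} ms`);
});
```

Measuring each call separately, rather than only the total, is what exposes a doubling pattern like the one above.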

The Root Cause

The issue was a conflict between our environment configuration and the Redis driver’s default behavior.

- Configuration Drift: The environment configuration for develop had REDIS_PASSWORD set. However, the Redis server on develop was running in a mode that did not require authentication.
- Driver Behavior: We were using Keyv, which defaults to the node-redis driver. When this driver detects an auth mismatch (sending a password when none is needed), it doesn’t just fail or ignore it; it enters a retry loop with an exponential back-off.
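To make "a retry loop with an exponential back-off" concrete, here is a generic sketch of the pattern. It is not node-redis's actual code: `retryWithBackoff`, `baseDelayMs`, and `maxRetries` are hypothetical, and the 200 ms base delay is chosen only to mirror the timings measured earlier. The `sleep` function is injectable so the delays can be observed without waiting.

```javascript
// Generic exponential back-off retry loop (illustration only; NOT the
// node-redis implementation). Names and defaults are hypothetical.
function defaultSleep(ms) {
  return new Promise((resolve) => setTimeout(resolve, ms));
}

async function retryWithBackoff(
  operation,
  { baseDelayMs = 200, maxRetries = 5, sleep = defaultSleep } = {}
) {
  let lastError;
  for (let attempt = 0; attempt <= maxRetries; attempt++) {
    try {
      return await operation(attempt);
    } catch (err) {
      lastError = err;
      if (attempt === maxRetries) break; // out of retries: give up
      // Delay doubles after every failure: 200, 400, 800, 1600, ... ms
      await sleep(baseDelayMs * 2 ** attempt);
    }
  }
  throw lastError;
}
```

Each failure waits twice as long as the one before, and a caller awaiting this loop pays the whole accumulated delay before it sees either a result or an error.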

Because microservice-iam made several sequential Redis calls per request, the back-off penalty was applied to each call in turn, compounding until the request timed out.
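Assuming the doubling seen in the simulation holds (call k taking 200 · 2^(k−1) ms), the cost of n sequential calls is a geometric series:

```latex
t_k = 200 \cdot 2^{k-1}\ \text{ms}, \qquad
T(n) = \sum_{k=1}^{n} 200 \cdot 2^{k-1} = 200\,\bigl(2^{n} - 1\bigr)\ \text{ms}
```

Four calls total T(4) = 3000 ms, still under the 5-second proxy limit; the fifth brings the total to T(5) = 6200 ms, past it. Under this assumption, any request making five or more such calls in sequence could not finish in time.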

The Fix

We didn’t need to fix the server config (though we still should); we needed the application to be resilient to this kind of drift.

We switched the underlying driver to ioredis. Unlike node-redis, ioredis handles unnecessary passwords gracefully: it logs a warning and executes the command without delay.

```javascript
// Before: Implicitly using node-redis
return createKeyv(redisUri);

// After: Explicitly injecting ioredis
const redis = createRedisInstance(config);
return createKeyv(redis);
```

After deploying this change, request durations dropped from 18 seconds to 35 milliseconds.

Takeaway

Inconsistent failure modes are often just volume issues in disguise. The fact that one service worked while another failed didn’t mean the infrastructure was healthy; it just meant one service was hitting the “poison” button more often than the other.
